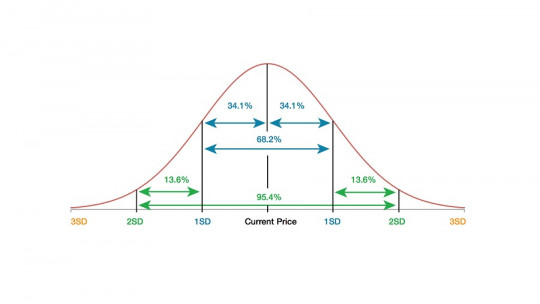

In research, regardless of the topic, it is well known that extreme values are unusual and rarely persist. Obtaining an extreme score on a math test, in a medical exam, or even when rolling dice is rare, and when the measurement is repeated, the result tends to be closer to the average.

Regression to the mean is the name given to this tendency of repeated measurements to move closer to central values. Below we explain the concept and give some examples of it.

What is regression to the mean?

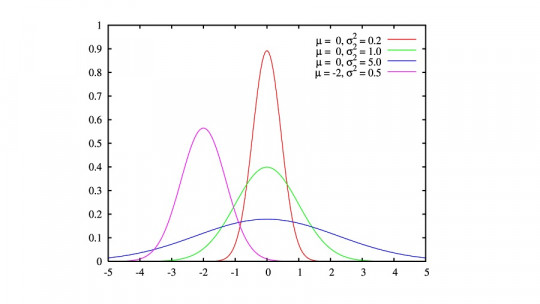

In statistics, regression to the mean, historically also called reversion to the mean and reversion to mediocrity, is the phenomenon whereby, if a variable yields an extreme value the first time it is measured, it will tend to be closer to the mean on a second measurement. Paradoxically, the reverse also holds: if the second measurement is the extreme one, the first will tend to have been closer to the mean.

Let’s imagine that we have two dice and we roll them. The sum of the two numbers on each roll ranges from 2 to 12; these two numbers are the extreme values, while 7 is the central value.

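If, for example, the first roll gives a sum of 12, it is unlikely that we will be as lucky on the second roll: a sum of 12 comes up only once in 36 rolls on average. If the dice are thrown again and again, most of the sums will fall closer to the central value of 7 than to the extremes.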
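To make the dice example concrete, here is a minimal sketch, assuming two fair six-sided dice and independent rolls. It enumerates every equally likely pair of rolls and computes, exactly, the chance of another 12 and the expected second sum given the first sum. The function name `conditional_stats` is hypothetical, chosen for illustration.

```python
from fractions import Fraction
from itertools import product

FACES = range(1, 7)

# The 36 equally likely outcomes of one roll of two fair dice, as sums.
SUMS = [a + b for a, b in product(FACES, FACES)]


def conditional_stats(first_sum):
    """Exact P(second sum == 12) and E[second sum], given the first roll's sum.

    Hypothetical helper: enumerates every (first roll, second roll) pair,
    assuming fair dice and independent rolls.
    """
    pairs = [(s1, s2) for s1, s2 in product(SUMS, SUMS) if s1 == first_sum]
    if not pairs:
        raise ValueError(f"a sum of {first_sum} is impossible with two dice")
    n = len(pairs)
    p_twelve = Fraction(sum(1 for _, s2 in pairs if s2 == 12), n)
    mean_second = Fraction(sum(s2 for _, s2 in pairs), n)
    return p_twelve, mean_second


p, m = conditional_stats(12)
print(p, m)  # 1/36 7
```

Because the rolls are independent, a first sum of 12 leaves the second roll's expected sum at 7, the central value, which is why a repeat of the extreme is unlikely.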

Regression to the mean is very important in research, since it must be taken into account when designing scientific experiments and interpreting the data collected, in order to avoid drawing wrong inferences.

History of the concept

The concept of regression to the mean was popularized by Sir Francis Galton in the late 19th century, who discussed the phenomenon in his work “Regression towards mediocrity in hereditary stature.”

Francis Galton observed that extreme traits, in his study parental height, did not seem to follow the same extreme pattern in the offspring. The children of very tall parents and of very short parents, instead of being equally tall or equally short, had heights that tended toward mediocrity, a notion that today we would call the average. To Galton, it was as if nature were seeking a way to neutralize extreme values.

He quantified this tendency and, in doing so, invented linear regression analysis, laying the foundation for much of modern statistics. Since then, the term “regression” has taken on a wide variety of meanings, and modern statisticians may use it to describe phenomena of sampling bias.
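In today's notation, the least-squares regression line (the standard textbook form, not Galton's own calculation) predicts a child's height $\hat{y}$ from a parent's height $x$, where $\bar{x}$ and $\bar{y}$ are the means, $s_x$ and $s_y$ the standard deviations, and $r$ the correlation between parent and child heights:

$$
\hat{y} = \bar{y} + r \, \frac{s_y}{s_x} \, (x - \bar{x})
$$

If both generations have the same spread ($s_x = s_y$) and the correlation is imperfect ($|r| < 1$), the predicted deviation from the mean is always smaller than the parent's:

$$
|\hat{y} - \bar{y}| = |r| \, |x - \bar{x}| < |x - \bar{x}|
$$

For instance, with a hypothetical correlation $r = 0.5$, a parent 10 cm above the mean gives a predicted child height of $0.5 \times 10\ \text{cm} = 5\ \text{cm}$ above the mean: still tall, but closer to average.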

Importance of regression to the mean in statistics

As mentioned above, regression to the mean is a phenomenon that must be taken into account in scientific research. To understand why, let’s look at the following case.

Imagine 1,000 people of the same age who have been tested to assess their risk of having a heart attack. As expected, their scores vary widely, but attention focuses on the 50 people who obtained the highest risk scores. On this basis, a special clinical intervention is proposed for them, introducing changes in diet, more physical activity, and pharmacological treatment.

Now imagine that, despite the effort put into developing the therapy, it turns out to have no real effect on the patients’ health. Even so, at a second physical examination carried out some time after the first, some patients are reported to show some kind of improvement.

This improvement would be nothing more than regression to the mean: this time, instead of scores suggesting a high risk of heart attack, these patients obtain scores indicating a somewhat lower risk. The research team could mistakenly conclude that their treatment plan worked, but this is not the case.
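The screening scenario can be sketched as a small simulation with no treatment at all. The model is an assumption for illustration, not taken from any real study: each person has a stable true risk drawn from N(50, 10) on a made-up score scale, and every examination adds independent measurement noise from N(0, 10). The function name `screen_and_retest` is hypothetical.

```python
import random
import statistics


def screen_and_retest(n_people=1000, n_selected=50, seed=1):
    """Simulate a screen-then-retest study in which NOTHING is done to patients.

    Hypothetical model: true risk ~ N(50, 10), and each exam adds
    independent measurement noise ~ N(0, 10). Returns the mean score of
    the selected group at the first and at the second exam.
    """
    rng = random.Random(seed)
    true_risk = [rng.gauss(50, 10) for _ in range(n_people)]
    first = [t + rng.gauss(0, 10) for t in true_risk]

    # Focus on the people with the highest first-exam scores.
    ranked = sorted(range(n_people), key=lambda i: first[i], reverse=True)
    selected = ranked[:n_selected]

    # Second exam: same true risk, fresh measurement noise, no treatment.
    second = {i: true_risk[i] + rng.gauss(0, 10) for i in selected}

    mean_first = statistics.fmean(first[i] for i in selected)
    mean_second = statistics.fmean(second[i] for i in selected)
    return mean_first, mean_second


before, after = screen_and_retest()
print(f"selected group: first exam {before:.1f}, second exam {after:.1f}")
```

Under these assumptions the selected group's average score drops noticeably at the second exam, even though no one's true risk changed: part of what made them look extreme the first time was measurement noise that does not repeat.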

The best way to avoid this error is to randomly assign the selected patients to two groups: one that receives the treatment and another that acts as a control. Comparing the results of the treatment group with those of the control group then shows whether or not the improvements can be attributed to the treatment plan.
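Here is a minimal sketch of why the randomized control works, assuming a hypothetical model in which true risk ~ N(50, 10), each exam adds noise ~ N(0, 10), and the "treatment" has no effect. To make the comparison less noisy, it assumes a larger hypothetical screening (20,000 people, top 1,000 selected) than the example in the text. The function name `randomized_trial` is illustrative.

```python
import random
import statistics


def randomized_trial(n_people=20000, n_selected=1000, seed=2):
    """Average score change in treated vs. control groups after selection.

    Hypothetical model: true risk ~ N(50, 10), measurement noise ~ N(0, 10),
    and the treatment does nothing, so any change is regression to the mean.
    """
    rng = random.Random(seed)
    true_risk = [rng.gauss(50, 10) for _ in range(n_people)]
    first = [t + rng.gauss(0, 10) for t in true_risk]

    ranked = sorted(range(n_people), key=lambda i: first[i], reverse=True)
    selected = ranked[:n_selected]
    rng.shuffle(selected)  # random assignment to the two groups

    half = n_selected // 2
    groups = {"treatment": selected[:half], "control": selected[half:]}

    change = {}
    for name, members in groups.items():
        # Second exam: true risk unchanged in both groups, only fresh noise.
        change[name] = statistics.fmean(
            (true_risk[i] + rng.gauss(0, 10)) - first[i] for i in members
        )
    return change


change = randomized_trial()
effect = change["treatment"] - change["control"]
print(f"treated: {change['treatment']:.1f}, control: {change['control']:.1f}, "
      f"estimated effect: {effect:.1f}")
```

Both groups "improve" by a similar amount, so looking at the treated group alone would overstate the therapy; the treated-minus-control difference, which stays near zero here, is the quantity that estimates the real effect.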

Fallacies and examples of regression to the mean

Many phenomena are attributed to the wrong causes when regression to the mean is not taken into account.

1. The case of Horace Secrist

An extreme example is what Horace Secrist thought he saw in his 1933 book The Triumph of Mediocrity in Business. This statistics professor collected hundreds of data points to show that profit rates in competitive businesses tended to move toward the average over time. That is, they started out very high but later began to decline, supposedly either from exhaustion or from having taken too many risks because the tycoon had become overconfident.

In truth, this was not a real phenomenon. The variability of profit rates was constant over time; what Secrist observed was regression to the mean, which he mistook for a natural tendency of businesses with high early profits to stagnate over time.
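Secrist's pattern can be reproduced with a sketch in which nothing changes over time. The model is an assumption: each hypothetical firm has a persistent profit rate drawn from N(10, 2) percent, plus independent yearly luck drawn from N(0, 2). The function name `simulate_profits` is illustrative.

```python
import random
import statistics


def simulate_profits(n_firms=10000, seed=3):
    """Two years of profit rates (%) for hypothetical firms in a stable world.

    Hypothetical model: persistent profitability ~ N(10, 2) plus
    independent yearly luck ~ N(0, 2); the process is identical each year.
    """
    rng = random.Random(seed)
    persistent = [rng.gauss(10, 2) for _ in range(n_firms)]
    year1 = [p + rng.gauss(0, 2) for p in persistent]
    year2 = [p + rng.gauss(0, 2) for p in persistent]

    # The top 10% of firms by first-year profit rate.
    ranked = sorted(range(n_firms), key=lambda i: year1[i], reverse=True)
    top = ranked[: n_firms // 10]

    return {
        "sd_year1": statistics.stdev(year1),
        "sd_year2": statistics.stdev(year2),
        "top_year1": statistics.fmean(year1[i] for i in top),
        "top_year2": statistics.fmean(year2[i] for i in top),
    }


result = simulate_profits()
for key, value in result.items():
    print(f"{key}: {value:.2f}")
```

The spread of profit rates is essentially the same in both years, yet the firms that topped year one fall back toward the average in year two while staying above it. Tracking only the top performers, as Secrist did, produces an apparent "triumph of mediocrity" that the data as a whole do not support.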

2. Massachusetts Schools

A more recent example comes from the evaluation of school test results in Massachusetts in 2000. The previous year, the state’s schools had been assigned educational targets, which essentially meant that each school’s average score, among other factors, had to exceed a value set by the educational authorities.

At the end of the year, the Department of Education gathered the results of all the academic tests administered in the state’s schools and tabulated the change in students’ scores between 1999 and 2000. The analysts were surprised to see that the schools that had performed worst in 1999, and had not met that year’s targets, managed to meet them the following year. This was interpreted as a sign that the state’s new educational policies were taking effect.

However, this was not the case. Confidence that the educational reforms were working was shattered when the schools that had obtained the best scores in 1999 performed worse the following year. After some debate, the idea that the schools with poor 1999 scores had genuinely improved was discarded: it was a case of regression to the mean, indicating that the educational policies had not been of much use.
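The pattern seen in the school data can be sketched with a model in which no policy has any effect. All numbers are hypothetical: each school's true quality is drawn from N(230, 10), each year's average score adds independent noise from N(0, 8), and the target is set at 230. The function name `school_scores` is illustrative.

```python
import random
import statistics


def school_scores(n_schools=2000, target=230.0, seed=4):
    """Two years of school averages with NO change in underlying quality.

    Hypothetical model: quality ~ N(230, 10), yearly noise ~ N(0, 8),
    hypothetical target = 230. Returns the share of schools that missed the
    target in year 1 but met it in year 2, and the average change of the
    top 10% of schools from year 1.
    """
    rng = random.Random(seed)
    quality = [rng.gauss(230, 10) for _ in range(n_schools)]
    y1 = [q + rng.gauss(0, 8) for q in quality]
    y2 = [q + rng.gauss(0, 8) for q in quality]

    missed = [i for i in range(n_schools) if y1[i] < target]
    share_now_meeting = sum(y2[i] >= target for i in missed) / len(missed)

    ranked = sorted(range(n_schools), key=lambda i: y1[i], reverse=True)
    top = ranked[: n_schools // 10]
    top_change = statistics.fmean(y2[i] - y1[i] for i in top)
    return share_now_meeting, top_change


share, top_change = school_scores()
print(f"missed in year 1, met in year 2: {share:.0%}")
print(f"average change of year-1 top 10%: {top_change:.1f}")
```

Under these assumptions a sizeable share of low-scoring schools "reach the target" and the best schools "decline" without anything real changing. Seeing both movements together is the signature of regression to the mean rather than of a policy effect.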